On April 13, 2023, we were hit hard. The University of Health Sciences and Pharmacy (UHSP) faced a serious adversary: the notorious LockBit ransomware group. It brought our operations to a screeching halt and thrust us into a battle for our data and systems. This wasn’t just a cyberattack; it was a reality check that revealed both vulnerabilities and strengths we never fully appreciated until we were in the thick of it.

You may wonder, “If LockBit was so notorious, why weren’t you better prepared?” The truth is, the cybersecurity community has been conditioned to view cyberattacks as a mark of shame, something to be hidden rather than shared. This culture of silence meant we didn’t have the insights we needed to prepare adequately against this ransomware attack.

This mindset needs to change. Cyberattacks shouldn’t be seen as blemishes on our reputations but as critical experiences to be openly discussed for better industry preparedness. Transparency is key. By sharing our story, I hope to shed light on the realities of a ransomware attack and the importance of cybersecurity readiness.

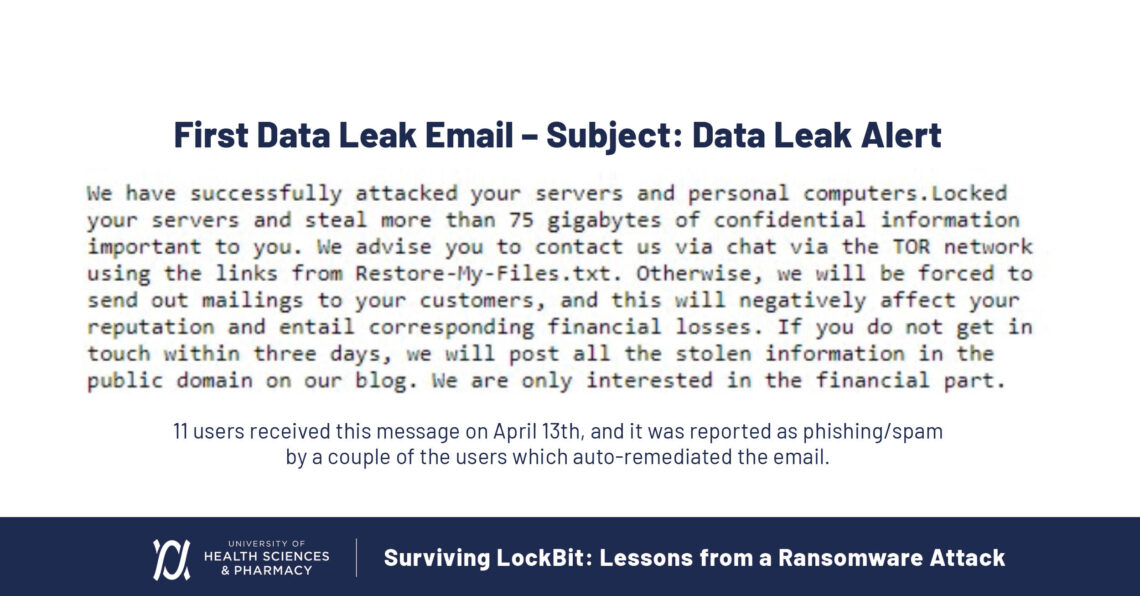

LockBit ransomware demands in the first data leak email to UHSP.

The LockBit Ransomware Attack: A Day-by-Day Breakdown

April 13th: Identification

It started on the morning of April 13th. At 4:30 am, I got the call: the university had experienced a loss of access to onsite servers and services. By 6:30 am, everything was down. At 7:00 am, not yet knowing the origin of the attack path, we began disaster recovery procedures. Later on, we discovered that eleven of our users had received an email saying we’d been attacked and that 75 GB of our data had been stolen. These emails weren’t reported.

To understand how we found ourselves in this situation, I’ll first review the background factors that contributed to our vulnerability. Our infrastructure was largely end-of-life, with servers and firewall equipment overdue for replacement. Although we had placed orders for new hardware, the supply chain disruptions caused by COVID had delayed their arrival. We were caught in a transitional phase, having started a cloud migration to move critical systems to Azure, but many key components were still running on outdated equipment.

Additionally, due to the end-of-life status of our firewall and prolonged supply chain issues, we had to partner with a local cloud service provider for a temporary solution. This arrangement unfortunately did not support multi-factor authentication (MFA) for our VPN, leaving a critical gap in our security. Despite our SaaS-first approach, intended to ensure that most applications were cloud-based and thus more secure, these compounding issues left us exposed.

April 14th to 23rd: Containment

By the 17th, we had managed to get 95% of our campus operational again and thought it was merely a hardware failure. On April 20th, the server environment crashed again. This time, it wouldn’t come back up, and we knew this wasn’t just a failure of end-of-life hardware.

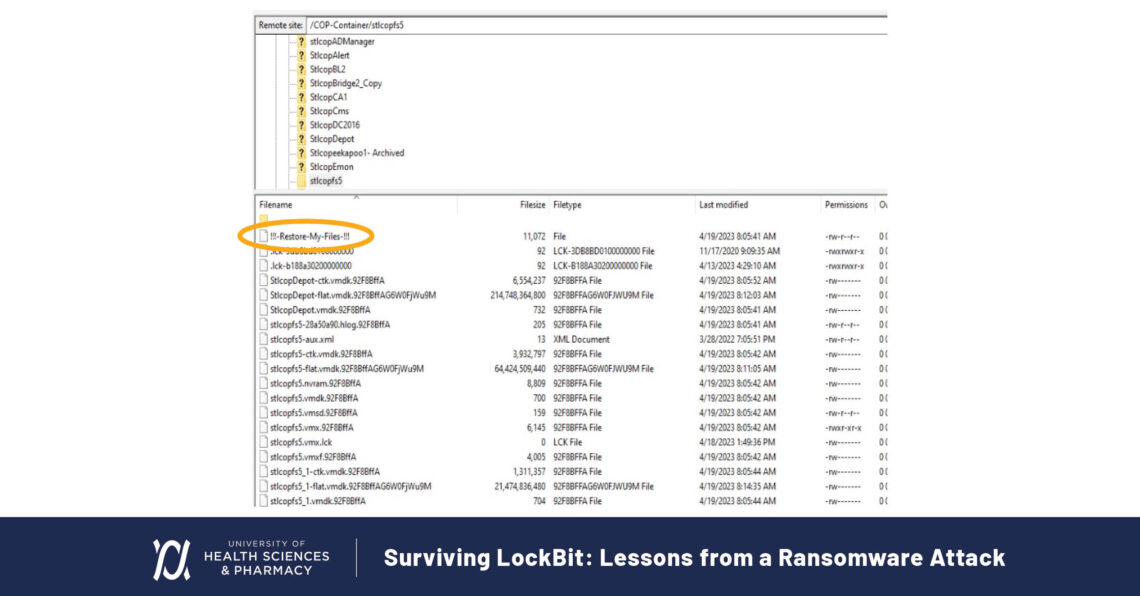

On April 21st, we found a ransomware note on the root of the hypervisor hardware, confirming the attack. At this point, we ceased recovery operations, updated leadership, and shifted to our incident response plan. The following day, after notifying the FBI, CISA, and our cyber insurance provider, I met with outside counsel. Incident response teams were brought in, and negotiations with the threat actor began.

During incident recovery and log checking, it became apparent that the attackers had compromised a user service account with a weak password and gotten in through our VPN, which wasn’t locked down. They used lateral movement to reach our server segment, extracting high-level credentials and escalating their privileges to access our hypervisor and exfiltrate our data.

While we were conducting our recovery process, they were still working their way through our systems. They managed to find and wipe our secondary backup location and created backdoor accounts to get back into our system. Our EDR systems didn’t detect anything because they don’t run on the hypervisor OS level.
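
One way to catch backdoor accounts like these is to compare the accounts that currently exist against a known-good baseline captured before the incident. The Python sketch below is only an illustration of that idea, not the tooling we used; the account names are hypothetical, and in practice both lists would come from a directory or hypervisor export.

```python
def find_unexpected_accounts(baseline, current):
    """Return accounts present now that were not in the known-good baseline."""
    return sorted(set(current) - set(baseline))


if __name__ == "__main__":
    # Hypothetical account names, for illustration only.
    baseline_accounts = ["administrator", "jdoe", "svc_backup"]
    current_accounts = ["administrator", "jdoe", "svc_backup", "support_admin2"]

    for account in find_unexpected_accounts(baseline_accounts, current_accounts):
        print("Account not in baseline, review it:", account)
```

A check like this only helps if the baseline itself is stored somewhere the attacker cannot modify.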

April 24th to June 2nd: Recovery

LockBit provided two partial lists containing 5% of the exfiltrated file names and made an initial ransom demand of $1.25 million, claiming to have 175 GB of our data.

We learned that some PII had been stored on a compromised server where we had our local password manager. That explained how the attackers escalated their privileges. At some point, the Department of Education was notified, presumably by LockBit’s attackers to put extra pressure on us. We conferred with the department and notified our campus.

We ran into issues with backups during recovery. Our primary backups had AD authentication, and with AD encrypted, we couldn’t authenticate. Thankfully, we managed to access our tertiary backups that were not connected to our environment, along with a set of passwords kept on paper in a physical safe in another part of our building. The safe also included a hard copy of our incident response plan, which provided details on the order of restoration for critical systems. That plus our tertiary backups allowed us to restore directly to Azure. From the University’s esports athletic division, we were able to borrow a gaming PC, which was used to restore the AD. This tided us over until new hardware arrived.
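
The trap we fell into, a backup that needs AD to log in while AD itself needs that backup to be restored, is a circular dependency, and it can be spotted on paper before an incident. The Python sketch below shows one way to do that by walking a recovery-dependency map and reporting any loops. The map and its system names are hypothetical and simplified; they are not our actual inventory.

```python
# Hypothetical recovery-dependency map: each entry lists what must already
# be working before that item can be used. Names are illustrative only.
RECOVERY_DEPENDENCIES = {
    "active_directory": ["primary_backup"],    # AD would be restored from the primary backup
    "primary_backup": ["active_directory"],    # but the backup system authenticates via AD
    "tertiary_backup": ["offline_passwords"],  # disconnected copy, unlocked with paper credentials
    "offline_passwords": [],                   # kept in a physical safe
}


def find_circular_dependencies(deps):
    """Return every dependency cycle found, each as a list of node names."""
    cycles = []
    finished = set()

    def visit(node, path):
        if node in path:
            # We are back at a node already on the current path: a cycle.
            cycles.append(path[path.index(node):] + [node])
            return
        if node in finished:
            return
        for dep in deps.get(node, []):
            visit(dep, path + [node])
        finished.add(node)

    for node in deps:
        visit(node, [])
    return cycles


if __name__ == "__main__":
    for cycle in find_circular_dependencies(RECOVERY_DEPENDENCIES):
        print("Circular recovery dependency:", " -> ".join(cycle))
```

With the map above, the only loop reported is the one between AD and the primary backup; the tertiary backup has no such loop because its credentials live offline.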

By June 2nd, recovery was nearly 100% complete; virtually all our systems were up and running again. At this stage, however, we weren’t yet clear on what had been lost or stolen.

Restore-My-Files note left by LockBit ransomware on UHSP servers.

June 6th to 14th: Landing & Data Drop

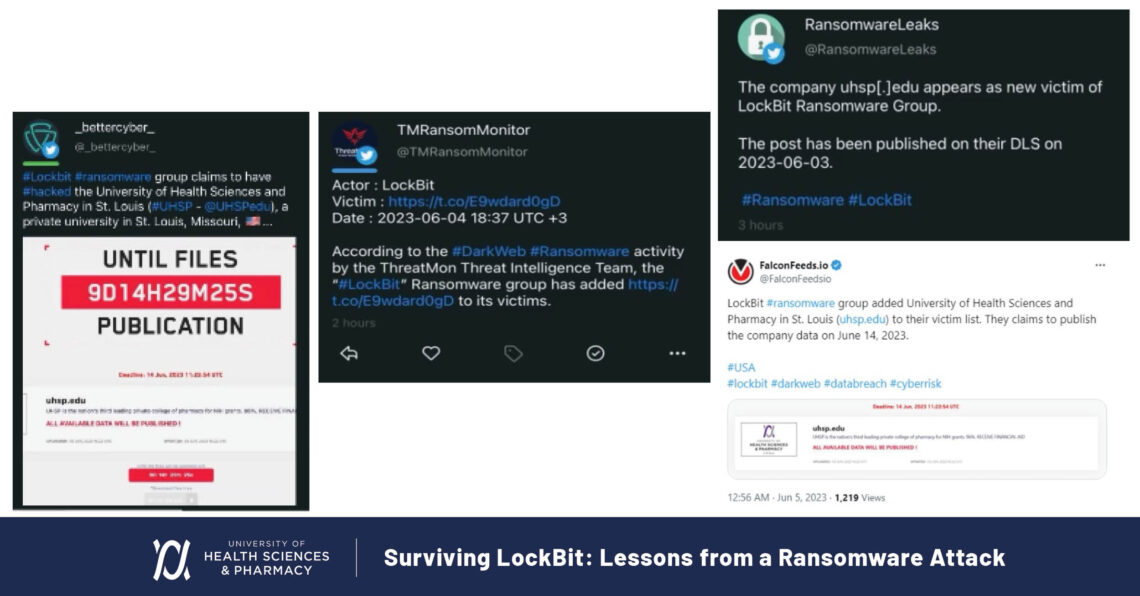

On June 6th, UHSP finally landed on LockBit’s page of victims. LockBit communicated that June 14th would be the drop date should we fail to pay the ransom.

Social media reports of LockBit ransomware attack on UHSP.

At this point, LockBit’s vultures started circling on Twitter, offering decryption key services for recovery. Realizing we could recover, LockBit began to negotiate down. LockBit tried everything to spur us into action, sending emails to users and pictures of encrypted files and our ESXi hypervisor to heighten the fear of our data being posted. It was clear the clock was ticking, and LockBit was scrambling to get us to pay up. We stood firm.

Drop day arrived on June 14th, and 2.65 GB of stolen data was posted, far from the 175 GB or even the 75 GB they had threatened us with previously. Amongst the data, all we found of note were four Social Security numbers and one immunization record. The owners of the compromised accounts were offered standard credit monitoring, which they accepted.
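
To put those numbers in proportion, here is a quick back-of-the-envelope calculation in Python. It uses only the figures quoted above; the function name is just for illustration.

```python
def posted_share_percent(posted_gb, claimed_gb):
    """Share of the claimed data volume that was actually posted, in percent."""
    return posted_gb / claimed_gb * 100


if __name__ == "__main__":
    posted_gb = 2.65
    for claimed_gb in (175, 75):
        share = posted_share_percent(posted_gb, claimed_gb)
        print(f"{posted_gb} GB is about {share:.1f}% of the claimed {claimed_gb} GB")
```

Either way, the posted data was only a few percent of what LockBit claimed to hold.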

And that was that. We didn’t pay a ransom and moved on, albeit taking lessons from the experience.

Lessons Learned

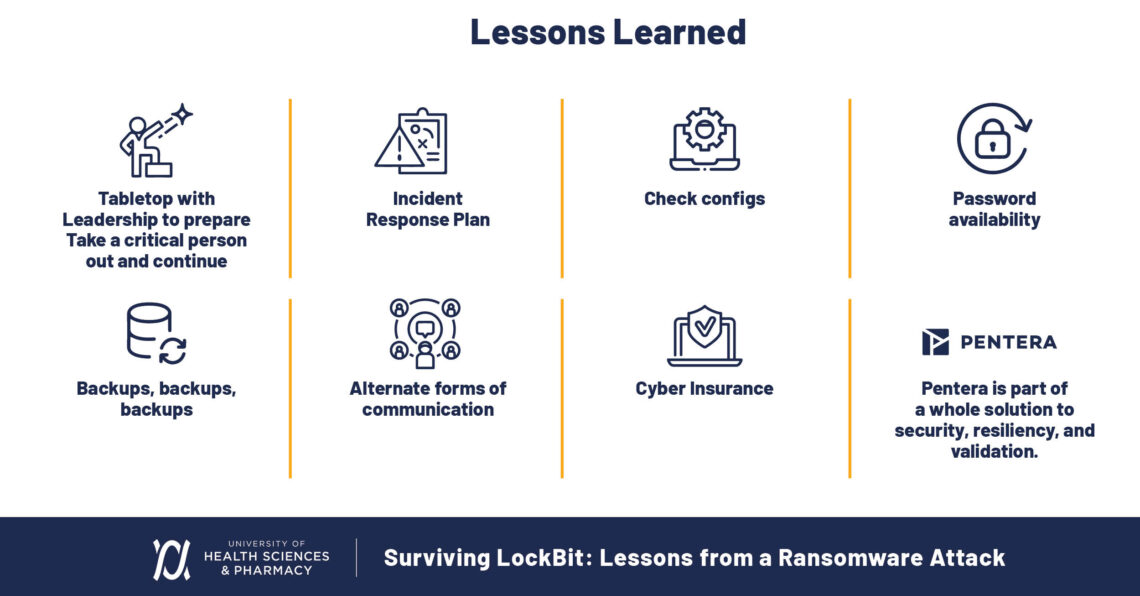

Going through the LockBit ransomware attack taught us some valuable lessons that I want to share with you:

- Always tabletop with leadership, security, and IT: Have a detailed, tested response plan in place. Ransomware attacks are a very real and significant threat to organizations, so you need a ready-made plan to put into action if you find yourself in the midst of an attack.

- Keep a printed copy of your incident response plan: In the event of a ransomware attack, things get encrypted, and you can’t access digital files. Having a printed copy of the incident response plan ensures you have a reliable reference to guide your actions during the crisis.

- Cyber insurance is a must: Having an incident response team ready to be called in was invaluable to us. Sure, rates go up a bit after an attack, but it’s a drop in the ocean compared to the price of a ransom or lost data.

- Test your resilience in an emulated ransomware scenario: Tools like Pentera would have allowed us to identify the issues we had accessing our primary backups because the ransomware was able to encrypt our AD. It was only thanks to our tertiary backup that we could recover as we did, but it would have been so much better if we could have seen and mitigated this exposure in a ransomware emulation.

- Use alternative forms of communication in a ransomware event: Internal email may be compromised. In our case, we used Gmail accounts to communicate with internal leaders, outside counsel, and incident response, which helped us deal with the situation effectively.

- Ensure multiple backup strategies and regularly test them: Our tertiary backup saved us when our primary and secondary backups were compromised. Regular testing of these backups ensures they will be functional when needed.

- Check configurations regularly: Misconfigurations can lead to vulnerabilities, as was the case with our VPN settings. Ensuring all configurations are correct and up-to-date can prevent easy access for attackers.

- Password management and availability: Make sure that you can access your passwords and that they are stored securely. Consider using a cloud-based password manager and ensure that critical passwords are accessible, even if systems are down.

- Plan for critical personnel absences: In your tabletop exercises, simulate scenarios where key personnel are unavailable. Ensure that other team members can step into critical roles if needed, maintaining continuity in your incident response.

- Proactive communication with authorities: Engage with law enforcement and relevant authorities promptly during an incident. Their support can be crucial in both managing the attack and in any legal or regulatory follow-up.

Key lessons learned from UHSP’s LockBit ransomware attack.

You’ll be better prepared and more resilient against ransomware attacks if you incorporate these lessons into your cybersecurity strategy.
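
As one example of turning the configuration lesson into a routine, the Python sketch below audits a list of remote-access accounts for the kinds of gaps that hurt us: no MFA on the VPN and a weakly protected service account. It is a minimal sketch under stated assumptions, not a real product integration; the `RemoteAccessAccount` fields and the sample accounts are hypothetical, and real data would have to come from your VPN or identity provider.

```python
from dataclasses import dataclass


@dataclass
class RemoteAccessAccount:
    # Hypothetical fields; real values would come from a VPN or identity export.
    name: str
    mfa_enabled: bool
    password_meets_policy: bool
    is_service_account: bool


def audit_account(account):
    """Return a list of human-readable findings for one account."""
    findings = []
    if not account.mfa_enabled:
        findings.append(f"{account.name}: MFA is not enforced")
    if not account.password_meets_policy:
        findings.append(f"{account.name}: password does not meet policy")
    if account.is_service_account:
        findings.append(f"{account.name}: service account has remote access")
    return findings


if __name__ == "__main__":
    # Illustrative accounts only.
    accounts = [
        RemoteAccessAccount("jdoe", True, True, False),
        RemoteAccessAccount("svc_legacy", False, False, True),
    ]
    for account in accounts:
        for finding in audit_account(account):
            print(finding)
```

Run on a schedule, a check like this surfaces drift before an attacker finds it.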

A Call for Proactive Cybersecurity

The LockBit ransomware attack we experienced at UHSP was a stark reminder of the persistent threat landscape we navigate. In sharing these experiences, I hope to emphasize the need for collaboration within the cybersecurity community and the importance of encouraging a culture of openness and transparency. By discussing these incidents openly, we can better prepare ourselves for future challenges and ensure we stay resilient and protected.

Understanding the sequence and impact of ransomware attacks can help organizations defend against them more effectively and recover more swiftly when they occur. Stay vigilant, stay prepared, and stay proactive when it comes to your security.

To make sure your security is prepared to block and tackle ransomware attacks and learn how you can eliminate ransomware exposure, read up on our Ransomware Ready offering.